Topics

Key components for scalable and resilient applications

- Application Gateway

- Azure Load Balancer

- Availability Set

  - A logical grouping that isolates VM resources from each other (the VMs run across multiple physical servers, racks, storage units, and network switches)

  - Used for building reliable cloud solutions

- Availability Zone

  - Groups of data centers that have independent power, cooling, and networking

  - VMs in availability zones are placed in different physical locations within the same region

  - Doesn’t support all VM sizes

  - Isn’t available in all regions

- Traffic Manager: provides DNS load balancing to your application, so you improve your ability to distribute your application around the world. Use Traffic Manager to improve the performance and availability of your application.

Application Gateway vs. Traffic Manager: Traffic Manager only returns the IP address of the service endpoint to the client, so it never sees the traffic between the client and the service. Application Gateway sits in the traffic path and sees the requests.

Load balancing the web service with the application gateway

Improve application resilience by distributing the load across multiple servers and using path-based routing to direct web traffic.

- Application Gateway operates at Layer 7 (the application layer), so it can route on HTTP attributes such as the URL path
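Path-based routing can be pictured as a prefix match from the URL path to a backend pool, followed by a round-robin pick inside that pool. The sketch below is a plain-Python illustration of the idea, not Application Gateway's implementation; the path prefixes, pool contents, and function name are hypothetical.

```python
from itertools import cycle

# Hypothetical path rules: URL path prefix -> servers in the backend pool.
PATH_RULES = {
    "/registration/": ["reg-vm-1", "reg-vm-2"],
    "/licenses/": ["lic-vm-1", "lic-vm-2"],
}
DEFAULT_POOL = ["web-vm-1"]  # used when no prefix matches

# One round-robin iterator per pool, so load is spread inside each pool.
_rotations = {prefix: cycle(servers) for prefix, servers in PATH_RULES.items()}
_default_rotation = cycle(DEFAULT_POOL)


def route(path: str) -> str:
    """Return the next server of the pool whose prefix matches the path."""
    matches = [prefix for prefix in PATH_RULES if path.startswith(prefix)]
    if not matches:
        return next(_default_rotation)
    # The longest matching prefix wins when several rules match.
    return next(_rotations[max(matches, key=len)])
```

Calling `route("/registration/new")` twice in a row returns the two registration servers in turn, while `route("/about")` falls through to the default pool.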

Scenario: you work for the motor vehicle department of a governmental organization. The department runs several public websites that enable drivers to register their vehicles and renew their driver’s licenses online. The vehicle registration website has been running on a single server and has suffered multiple outages because of server failures.

Application Gateway features

- Application delivery controller

- Load balancing HTTP traffic

- Web Application Firewall

- Support SSL

- Encrypt end-to-end traffic with TLS

Microsoft Learn covers Application Gateway in separate learning modules: one on the theory, one with the practical exercises, and one on Application Gateway and encryption.

Links to sample code

– Terraform implementation of Azure Application Gateway

– Terraform implementation of Azure Application Gateway’s backend pool with VMs

– Terraform implementation of Azure Application Gateway’s HTTPS with Key Vault as certificate store

Load balancing with Azure Load Balancer

- Azure Load Balancer makes applications resilient to failure and easy to scale

- Azure Load Balancer operates at Layer 4 (the transport layer)

- LB automatically distributes requests across multiple VMs and services, so users still get service when a VM fails

- LB provides high availability

- LB uses a hash-based distribution algorithm (5-tuple)

- The 5-tuple hash maps traffic to available servers (source IP, source port, destination IP, destination port, protocol type)

- Supports inbound and outbound scenarios

- Low latency, high throughput, scales up to millions of flows for all TCP and UDP applications

- Isn’t a physical instance; it’s an object that describes how the infrastructure should be configured

- For high availability, we can use LB with availability sets (protection against hardware failure) and availability zones (protection against data center failure)

Scenario: You work for a healthcare organization that’s launching a new portal application in which patients can schedule appointments. The application has a patient portal and web application front end and a business-tier database. The database is used by the front end to retrieve and save patient information.

The new portal needs to be available around the clock to handle failures. The portal must adjust to fluctuations in load by adding and removing resources to match the load. The organization needs a solution that distributes work to virtual machines across the system as virtual machines are added. The solution should detect failures and reroute jobs to virtual machines as needed. Improved resiliency and scalability help ensure that patients can schedule appointments from any location [Source].

Link to sample code that deploys simple Nginx web servers with an availability set and a public load balancer.

Load Balancer SKU

- Basic Load Balancer

  - Port forwarding

  - Automatic reconfiguration

  - Health probes

  - Outbound connections through source network address translation (SNAT)

  - Diagnostics through Azure Log Analytics for public-facing load balancers

  - Can be used only with availability sets

- Standard Load Balancer

  - Supports all the Basic LB features

  - HTTPS health probes

  - Availability zones

  - Diagnostics through Azure Monitor, for multidimensional metrics

  - High availability (HA) ports

  - Outbound rules

  - Guaranteed SLA (99.99% for two or more VMs)
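To put the 99.99% SLA figure into perspective, it can be converted into a downtime budget with simple arithmetic. The helper below is only an illustration; the function name is made up, and the calculation assumes a 365-day year.

```python
def allowed_downtime_minutes(sla_percent: float, days: int = 365) -> float:
    """Maximum downtime, in minutes, that still meets the SLA over the period."""
    total_minutes = days * 24 * 60
    return total_minutes * (1 - sla_percent / 100)


# 99.99% over a 365-day year leaves a budget of roughly 52.56 minutes.
print(f"{allowed_downtime_minutes(99.99):.2f} minutes per year")
# Over a 30-day month the same SLA leaves roughly 4.32 minutes.
print(f"{allowed_downtime_minutes(99.99, days=30):.2f} minutes per month")
```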

Load Balancer Types

Internal LB

- Distributes the load from internal Azure resources to other Azure resources

- No traffic from the internet is allowed

External/Public LB

- Distributes client traffic across multiple VMs

- Permits traffic from the internet (browser, mobile app, other resources)

- A public LB maps the public IP address and port of incoming traffic to the private IP address and port number of a VM in the back-end pool

- Distributes traffic by applying the load-balancing rule

Distribution modes

- By default, LB distributes traffic equally among the VMs

- Distribution modes change this behavior

- When you create the load balancer endpoint, you must specify the distribution mode in the load balancer rule

- Prerequisites for a load balancer rule

  - Must have at least one backend

  - Must have at least one health probe
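The two prerequisites fit together: the backend pool says where traffic may go, and the health probe decides which of those backends are currently eligible. The following is a minimal sketch of that relationship in plain Python; the class, its method, and the VM names are hypothetical and do not mirror any Azure SDK type.

```python
from typing import Callable, List


class LoadBalancingRule:
    """Toy model of a rule: a backend pool plus a health probe."""

    def __init__(self, backends: List[str], probe: Callable[[str], bool]):
        # Mirror the prerequisites: no backend or no probe means no rule.
        if not backends:
            raise ValueError("a rule needs at least one backend")
        if probe is None:
            raise ValueError("a rule needs a health probe")
        self.backends = list(backends)
        self.probe = probe

    def eligible_backends(self) -> List[str]:
        """Backends that currently pass the probe and may receive traffic."""
        return [vm for vm in self.backends if self.probe(vm)]


# Hypothetical probe results; in practice the probe would be a TCP or HTTP check.
status = {"vm-0": True, "vm-1": False, "vm-2": True}
rule = LoadBalancingRule(["vm-0", "vm-1", "vm-2"], probe=lambda vm: status[vm])
print(rule.eligible_backends())
```

When `vm-1` fails its probe it simply drops out of the eligible list, which is how users keep getting service while a VM is down.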

Five-tuple hash

- The default distribution mode of LB

- Because the source port is included in the hash and can change for each session, the client might be directed to a different VM for each session.
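The effect of including the source port can be seen in a small simulation. This is a sketch under stated assumptions: SHA-256 stands in for whatever hash the load balancer actually uses, and the backend names and IP addresses are made up.

```python
import hashlib

BACKENDS = ["vm-0", "vm-1", "vm-2"]  # hypothetical backend pool


def pick_backend(src_ip, src_port, dst_ip, dst_port, protocol, backends=BACKENDS):
    """Map a flow's 5-tuple to one backend with a stable hash."""
    key = f"{src_ip}|{src_port}|{dst_ip}|{dst_port}|{protocol}".encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]


# Same client and destination, but a new source port for each session:
# packets of one session stay together, separate sessions may land elsewhere.
for port in range(50000, 50005):
    print(port, "->", pick_backend("203.0.113.7", port, "20.0.0.10", 443, "TCP"))
```

Every packet of one flow hashes to the same VM, but a new session from the same client usually uses a new source port and so may map to a different VM.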

Source IP affinity / Session affinity / Client IP affinity

- This distribution mode is also known as session affinity or client IP affinity

- To map traffic to a server, a 2-tuple hash (source IP, destination IP) or a 3-tuple hash (source IP, destination IP, protocol) is used

- The hash ensures that requests from a specific client are always sent to the same VM.
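Leaving the ports out of the hash is what makes a client stick to one VM. The sketch below illustrates the 2-tuple and 3-tuple variants; SHA-256, the mode labels, the function name, and the addresses are assumptions made for the example, not the load balancer's actual internals.

```python
import hashlib

BACKENDS = ["vm-0", "vm-1", "vm-2"]  # hypothetical backend pool


def pick_backend_with_affinity(flow, mode="source_ip", backends=BACKENDS):
    """Pick a backend from a (src_ip, src_port, dst_ip, dst_port, protocol) flow.

    mode="source_ip" hashes the 2-tuple, mode="source_ip_protocol" the 3-tuple.
    Ports are deliberately ignored in both modes.
    """
    src_ip, _src_port, dst_ip, _dst_port, protocol = flow
    if mode == "source_ip":
        fields = (src_ip, dst_ip)
    elif mode == "source_ip_protocol":
        fields = (src_ip, dst_ip, protocol)
    else:
        raise ValueError(f"unknown mode: {mode}")
    key = "|".join(fields).encode()
    index = int.from_bytes(hashlib.sha256(key).digest()[:8], "big") % len(backends)
    return backends[index]


# A TCP session and a separate UDP session from the same client reach the
# same VM with the 2-tuple hash, regardless of their ports.
tcp_flow = ("203.0.113.7", 50000, "20.0.0.10", 443, "TCP")
udp_flow = ("203.0.113.7", 50001, "20.0.0.10", 8443, "UDP")
print(pick_backend_with_affinity(tcp_flow), pick_backend_with_affinity(udp_flow))
```

With the 3-tuple variant the protocol is part of the key, so the TCP and UDP sessions of one client are no longer guaranteed to share a VM.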

Scenario: Remote Desktop Gateway is incompatible with the 5-tuple hash, so source IP affinity is used instead.

Scenario: Media upload must use this distribution mode, because an upload involves two sessions: a TCP session that monitors the progress and a separate UDP session that uploads the file. Both have to reach the same VM.

Scenario: The presentation tier uses in-memory sessions to store the logged-in user’s profile as the user interacts with the portal. In this scenario, the load balancer must provide source IP affinity to maintain the user’s session. The profile is stored only on the virtual machine that the client first connects to, so every later request from that IP address has to be directed to the same server.

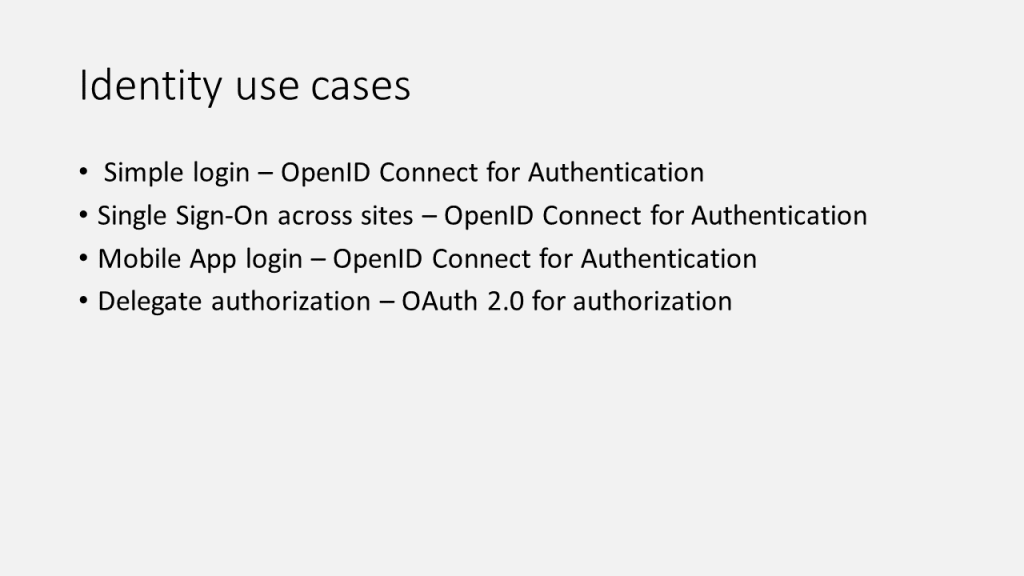

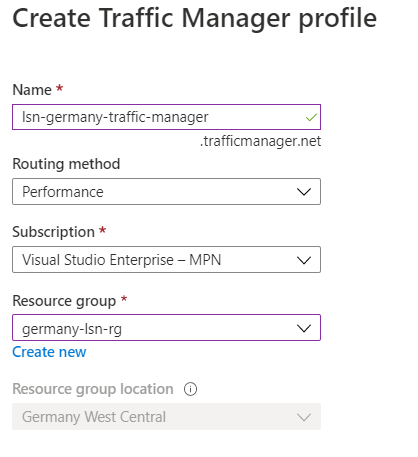

Enhance service availability and data locality with Traffic Manager

Scenario: You work for a company that provides a global music streaming web application. You want your customers, wherever they are in the world, to experience near-zero downtime. The application needs to be responsive. You know that poor performance might drive your customers to your competitors. You’d also like to have customized experiences for customers who are in specific regions for user interface, legal, and operational reasons.

Your customers require 24×7 availability of your company’s streaming music application. Cloud services in one region might become unavailable because of technical issues, such as planned maintenance or scheduled security updates. In these scenarios, your company wants to have a failover endpoint so your customers can continue to access its services.

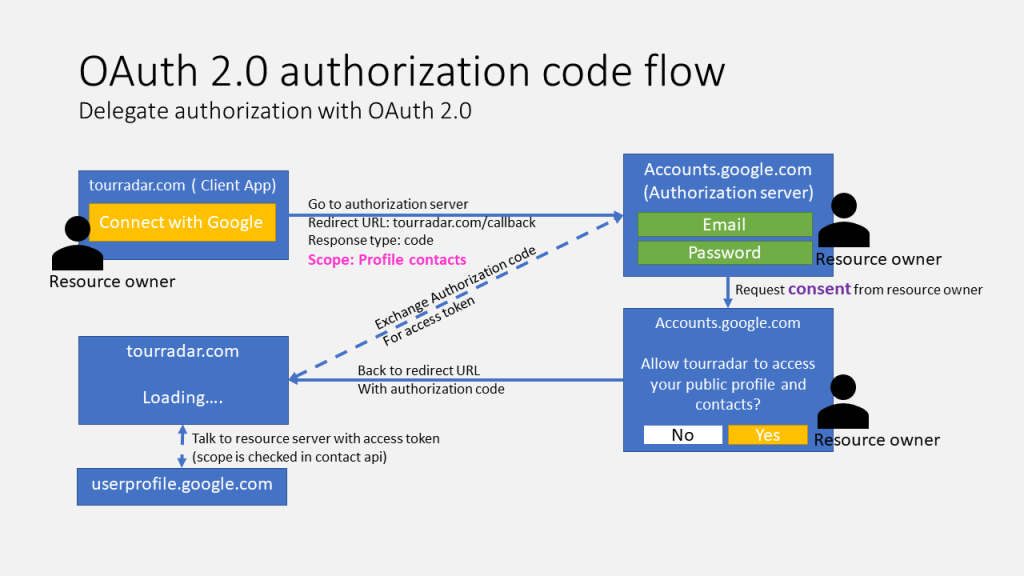

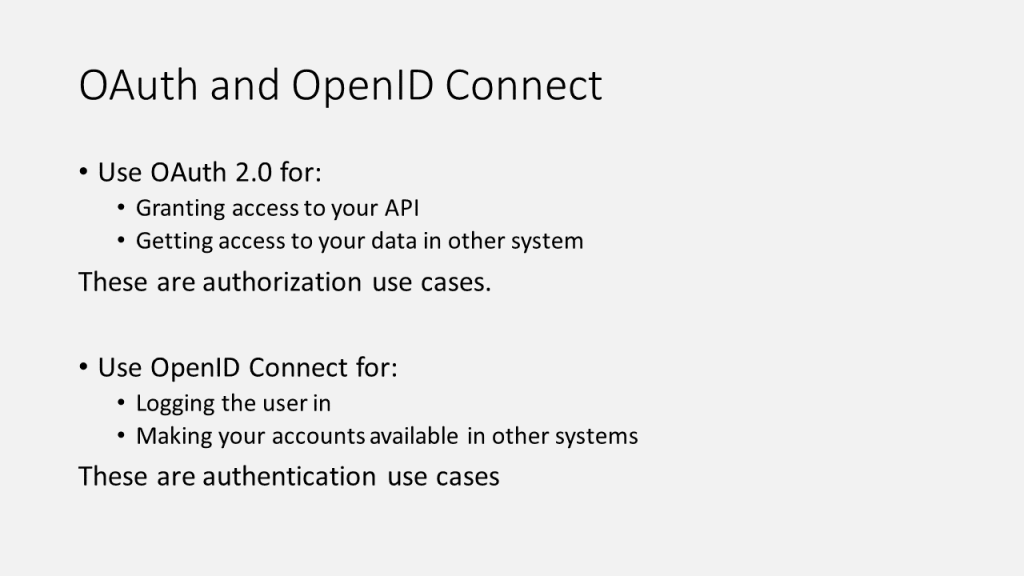

- Traffic Manager is a DNS-based traffic load balancer

- Traffic Manager distributes traffic to different regions for high availability, resilience, and responsiveness

- It resolves the DNS name of the service to an IP address (it directs the client to a service endpoint based on the rules of the traffic-routing method)

- It isn’t a proxy or gateway

- It doesn’t see the traffic that a client sends to a server

- It only gives the client the IP address of where they need to go

- It’s a global resource: its location is always Global rather than a specific region

Traffic Manager Profile’s routing methods

- Each profile has only one routing method

Weighted routing

- Distributes traffic across a set of endpoints, either evenly or based on different weights

- Weights range from 1 to 1000

- For each DNS query received, Traffic Manager randomly chooses an available endpoint

- The probability of choosing an endpoint is based on the weights assigned to the endpoints
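Weighted routing amounts to a weighted random draw over the endpoints that are currently available. The following is a minimal sketch of that draw, not Traffic Manager's code; the endpoint names, weights, and the `(name, weight, healthy)` tuple layout are assumptions for the example.

```python
import random


def pick_weighted(endpoints, rng=random):
    """Choose one available endpoint with probability proportional to its weight.

    endpoints: list of (name, weight, healthy) tuples.
    rng: the random module or a random.Random instance (useful for seeding).
    """
    available = [(name, weight) for name, weight, healthy in endpoints if healthy]
    if not available:
        raise LookupError("no available endpoint")
    names, weights = zip(*available)
    return rng.choices(names, weights=weights, k=1)[0]


# Hypothetical profile: roughly 75% of queries go to "blue", 25% to "green";
# "canary" is down and is never returned.
profile = [("blue", 750, True), ("green", 250, True), ("canary", 1000, False)]
print(pick_weighted(profile))
```

Giving every endpoint the same weight produces an even distribution, and shifting weight gradually from one endpoint to another is a common way to roll out a new version.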

Performance routing

- When endpoints are in different geographic locations, the user is sent to the endpoint with the best performance (lowest latency)

- It uses an internet latency table, which actively tracks network latencies to the endpoints
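The lookup behind performance routing can be sketched as "pick the healthy endpoint with the lowest latency for where the query came from". The latency values, region names, and table layout below are invented for illustration; the real latency table is maintained by the service.

```python
# Hypothetical latency table: client location -> {endpoint: latency in ms}.
LATENCY_MS = {
    "europe": {"westeurope": 18, "eastus": 95, "southeastasia": 180},
    "asia": {"westeurope": 170, "eastus": 210, "southeastasia": 25},
}


def pick_fastest(client_location, healthy_endpoints):
    """Return the healthy endpoint with the lowest latency for this client."""
    candidates = {
        endpoint: ms
        for endpoint, ms in LATENCY_MS[client_location].items()
        if endpoint in healthy_endpoints
    }
    if not candidates:
        raise LookupError("no healthy endpoint")
    return min(candidates, key=candidates.get)


all_up = {"westeurope", "eastus", "southeastasia"}
print(pick_fastest("europe", all_up))                   # closest endpoint
print(pick_fastest("europe", all_up - {"westeurope"}))  # next best when it is down
```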

Geographic routing

- Based on where the DNS query originated, the user is sent to the endpoint assigned to that region

- It’s good for geo-fencing content, e.g. for countries with specific terms and conditions for regional compliance
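Geographic routing is essentially a lookup from the query's origin to the endpoint assigned to that geography. The sketch below assumes a simple country-code mapping with a catch-all entry; the codes, endpoint names, and the fallback behavior are illustrative choices, not the service's configuration format.

```python
# Hypothetical mapping from the country a DNS query comes from to an endpoint.
GEO_MAP = {
    "DE": "eu-endpoint",
    "FR": "eu-endpoint",
    "US": "us-endpoint",
}
FALLBACK = "world-endpoint"  # assumed catch-all for unmapped countries


def pick_by_geography(country_code: str) -> str:
    """Return the endpoint assigned to the country, or the catch-all."""
    return GEO_MAP.get(country_code.upper(), FALLBACK)


print(pick_by_geography("de"))  # mapped to the EU endpoint
print(pick_by_geography("JP"))  # not mapped, so the catch-all is used
```

Unlike performance routing, the choice here ignores latency: a user is tied to the endpoint for their region even if another one would respond faster.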

Multivalue routing

- Returns multiple healthy endpoints in a single DNS query response

- The caller can make client-side retries if an endpoint is unresponsive

- It can increase the availability of the service and reduce the latency associated with a new DNS query
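The benefit of multivalue routing shows up on the client side: with several addresses in hand, a failed attempt can move on to the next one without another DNS lookup. This is a rough sketch of both halves; the function names, the `max_results` parameter, and the addresses are assumptions for the example.

```python
def resolve_multivalue(endpoints, max_results=2):
    """Return up to max_results healthy addresses for one DNS query.

    endpoints: dict mapping address -> healthy flag.
    """
    healthy = [address for address, ok in endpoints.items() if ok]
    return healthy[:max_results]


def call_with_retries(addresses, send):
    """Try each returned address in turn, without issuing a new DNS query."""
    last_error = None
    for address in addresses:
        try:
            return send(address)
        except ConnectionError as exc:
            last_error = exc
    raise ConnectionError("all returned endpoints failed") from last_error


# Hypothetical endpoints (documentation-range IPs); the first one is unhealthy.
endpoints = {"192.0.2.10": False, "192.0.2.11": True, "192.0.2.12": True}
print(resolve_multivalue(endpoints))
```

Here `send` is any caller-supplied function that raises `ConnectionError` when an address does not respond.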

Subnet routing

- Maps sets of user IP address ranges to specific endpoints, e.g. it can be used for testing an app before release (internal test), or to block users from specific ISPs.
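Subnet routing can be sketched with Python's standard `ipaddress` module: check which configured range contains the client address and return the endpoint mapped to it. The ranges, endpoint names, and the default endpoint are hypothetical.

```python
import ipaddress

# Hypothetical mapping from client address ranges to endpoints.
SUBNET_MAP = [
    (ipaddress.ip_network("198.51.100.0/24"), "staging-endpoint"),        # internal testers
    (ipaddress.ip_network("203.0.113.0/24"), "blocked-notice-endpoint"),  # a blocked ISP
]
DEFAULT_ENDPOINT = "production-endpoint"  # assumed endpoint for everyone else


def pick_by_subnet(client_ip: str) -> str:
    """Return the endpoint mapped to the range that contains client_ip."""
    address = ipaddress.ip_address(client_ip)
    for network, endpoint in SUBNET_MAP:
        if address in network:
            return endpoint
    return DEFAULT_ENDPOINT


print(pick_by_subnet("198.51.100.42"))  # tester range
print(pick_by_subnet("192.0.2.1"))      # no range matches
```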

Priority routing

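- The Traffic Manager profile contains a prioritized list of service endpoints; queries are answered with the highest-priority endpoint that is available, which gives a primary/failover setup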
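Priority routing is the failover case: walk the prioritized list and return the first endpoint that is still available. In this minimal sketch a lower number means a higher priority; the endpoint names and the `(name, priority, healthy)` tuple layout are assumptions for the example.

```python
def pick_by_priority(endpoints):
    """Return the available endpoint with the highest priority.

    endpoints: list of (name, priority, healthy); lower number = higher priority.
    """
    available = [(priority, name) for name, priority, healthy in endpoints if healthy]
    if not available:
        raise LookupError("no available endpoint")
    return min(available)[1]


# Hypothetical primary/failover setup.
endpoints = [("primary-westeurope", 1, True), ("failover-northeurope", 2, True)]
print(pick_by_priority(endpoints))

# When the primary is marked unhealthy, queries fall to the failover endpoint.
endpoints[0] = ("primary-westeurope", 1, False)
print(pick_by_priority(endpoints))
```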

Traffic Manager Profile’s endpoints

- An endpoint is the destination location that is returned to the client

- Types are

  - Azure endpoints: for services hosted in Azure

    - Azure App Service

    - Public IP resources that are associated with load balancers or VMs

  - External endpoints

    - For IPv4/IPv6 addresses

    - FQDNs

    - Services hosted outside Azure, either on-premises or in another cloud

  - Nested endpoints: used to combine Traffic Manager profiles to create more flexible traffic-routing schemes that support the needs of larger, more complex deployments

- Each Traffic Manager profile can have several endpoints of different types

Link to sample code that deploys a Traffic Manager profile.

Source: https://docs.microsoft.com/en-us/learn/modules/distribute-load-with-traffic-manager/